In mathematics, the linear span (also called the linear hull[1] or just span) of a set S of elements of a vector space V is the smallest linear subspace of V that contains S. It is the set of all finite linear combinations of the elements of S,[2] and the intersection of all linear subspaces that contain S. It is often denoted span(S)[3] or ⟨S⟩.

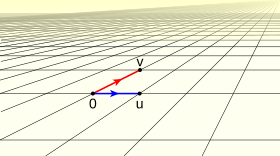

For example, in geometry, two linearly independent vectors span a plane.

To express that a vector space V is a linear span of a subset S, one commonly uses one of the following phrases: S spans V; S is a spanning set of V; V is spanned or generated by S; S is a generator set or a generating set of V.

Spans can be generalized to many mathematical structures, in which case the smallest substructure containing S is generally called the substructure generated by S.

Definition

Given a vector space V over a field K, the span of a set S of vectors (not necessarily finite) is defined to be the intersection W of all subspaces of V that contain S. It is thus the smallest (for set inclusion) subspace containing S. It is referred to as the subspace spanned by S, or by the vectors in S. Conversely, S is called a spanning set of W, and we say that S spans W.

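It follows from this definition that the span of S is the set of all finite linear combinations of elements (vectors) of S, and can be defined as such.[4][5][6] That is,

$$\operatorname{span}(S) = \{\lambda_1 v_1 + \lambda_2 v_2 + \cdots + \lambda_n v_n \mid n \in \mathbb{N},\ v_1, \ldots, v_n \in S,\ \lambda_1, \ldots, \lambda_n \in K\}.$$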

When S is empty, the only possibility is n = 0, and the previous expression for span(S) reduces to the empty sum.[a] The standard convention for the empty sum thus implies span(∅) = {0}, a property that is immediate with the other definitions. However, many introductory textbooks simply include this fact as part of the definition.

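When $S = \{v_1, \ldots, v_n\}$ is finite, one has

$$\operatorname{span}(S) = \{\lambda_1 v_1 + \lambda_2 v_2 + \cdots + \lambda_n v_n \mid \lambda_1, \ldots, \lambda_n \in K\}.$$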
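For a finite set S of vectors with rational coordinates, membership in span(S) can be checked by solving for the coefficients of a linear combination. The following is a minimal sketch, assuming vectors are given as tuples of rationals; the function name `coefficients_in_span` is hypothetical and chosen only for illustration. It uses exact Gauss-Jordan elimination on the augmented matrix.

```python
from fractions import Fraction


def coefficients_in_span(S, b):
    """Return scalars lam with sum(lam[j] * S[j]) == b, or None if b is not in span(S).

    Illustrative helper (not from any library). S is a list of vectors,
    b is a vector of the same length; entries are ints or Fractions.
    """
    n, k = len(b), len(S)
    # Augmented matrix: column j holds the vector S[j], the last column holds b.
    M = [[Fraction(S[j][i]) for j in range(k)] + [Fraction(b[i])] for i in range(n)]
    pivots = []  # pivots[r] is the column of the pivot found in row r
    r = 0
    for c in range(k):
        # Find a row at or below r with a nonzero entry in column c.
        p = next((i for i in range(r, n) if M[i][c] != 0), None)
        if p is None:
            continue  # S[c] depends on earlier vectors; its coefficient stays 0
        M[r], M[p] = M[p], M[r]
        pv = M[r][c]
        M[r] = [x / pv for x in M[r]]
        # Clear column c in every other row.
        for i in range(n):
            if i != r and M[i][c] != 0:
                f = M[i][c]
                M[i] = [x - f * y for x, y in zip(M[i], M[r])]
        pivots.append(c)
        r += 1
    # A zero row with a nonzero right-hand side means b is not in span(S).
    if any(M[i][k] != 0 for i in range(r, n)):
        return None
    lam = [Fraction(0)] * k
    for row, c in enumerate(pivots):
        lam[c] = M[row][k]
    return lam
```

For instance, with S = [(1, 0, 0), (0, 1, 0), (1, 1, 0)] the vector (2, 3, 0) yields the coefficients 2, 3, 0, while (0, 0, 1) yields `None`. With S empty, only the zero vector is accepted, matching the empty-sum convention.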

Examples

The real vector space $\mathbb{R}^3$ has {(−1, 0, 0), (0, 1, 0), (0, 0, 1)} as a spanning set. This particular spanning set is also a basis. If (−1, 0, 0) were replaced by (1, 0, 0), it would also form the canonical basis of $\mathbb{R}^3$.

Another spanning set for the same space is given by {(1, 2, 3), (0, 1, 2), (−1, 1⁄2, 3), (1, 1, 1)}, but this set is not a basis, because it is linearly dependent.

The set {(1, 0, 0), (0, 1, 0), (1, 1, 0)} is not a spanning set of $\mathbb{R}^3$, since its span is the space of all vectors in $\mathbb{R}^3$ whose last component is zero. That space is also spanned by the set {(1, 0, 0), (0, 1, 0)}, as (1, 1, 0) is a linear combination of (1, 0, 0) and (0, 1, 0). Thus, the spanned space is not $\mathbb{R}^3$. It can be identified with $\mathbb{R}^2$ by removing the third components, which are all equal to zero.
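The three example sets above can be checked mechanically: a finite set of vectors spans the 3-dimensional coordinate space exactly when its rank is 3, and it is a basis when, in addition, it has exactly 3 elements. The sketch below assumes rational coordinates and uses a hypothetical helper `rank` based on exact Gaussian elimination; the names `A`, `B`, `C` are labels introduced here for the three sets.

```python
from fractions import Fraction


def rank(vectors):
    """Rank of a list of equal-length vectors, by exact Gaussian elimination."""
    m = [[Fraction(x) for x in row] for row in vectors]
    ncols = len(m[0]) if m else 0
    r = 0  # number of pivot rows found so far
    for c in range(ncols):
        # Look for a row at or below r with a nonzero entry in column c.
        pivot = next((i for i in range(r, len(m)) if m[i][c] != 0), None)
        if pivot is None:
            continue
        m[r], m[pivot] = m[pivot], m[r]
        for i in range(r + 1, len(m)):
            factor = m[i][c] / m[r][c]
            m[i] = [a - factor * b for a, b in zip(m[i], m[r])]
        r += 1
    return r


A = [(-1, 0, 0), (0, 1, 0), (0, 0, 1)]
B = [(1, 2, 3), (0, 1, 2), (-1, Fraction(1, 2), 3), (1, 1, 1)]
C = [(1, 0, 0), (0, 1, 0), (1, 1, 0)]

# A and B have rank 3, so they span the whole space; C has rank 2, so it does not.
ranks = {"A": rank(A), "B": rank(B), "C": rank(C)}
# B spans the space but has 4 > 3 elements, so it is linearly dependent.
is_basis = {name: rank(s) == 3 and len(s) == 3 for name, s in (("A", A), ("B", B), ("C", C))}
```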

The empty set is a spanning set of {(0, 0, 0)}, since the empty set is a subset of every linear subspace of $\mathbb{R}^3$, and {(0, 0, 0)} is the intersection of all of these subspaces.

The set of monomials $x^n$, where n is a non-negative integer, spans the space of polynomials.

Theorems

Equivalence of definitions

The set of all linear combinations of a subset S of V, a vector space over K, is the smallest linear subspace of V containing S.

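- Proof. We first prove that span S is a subspace of V. Since S is a subset of V, we only need to prove the existence of a zero vector 0 in span S, that span S is closed under addition, and that span S is closed under scalar multiplication. Letting $S = \{v_1, v_2, \ldots, v_n\}$, it is trivial that the zero vector of V exists in span S, since $\mathbf{0} = 0v_1 + 0v_2 + \cdots + 0v_n$. Adding together two linear combinations of S also produces a linear combination of S: $(\lambda_1 v_1 + \cdots + \lambda_n v_n) + (\mu_1 v_1 + \cdots + \mu_n v_n) = (\lambda_1 + \mu_1) v_1 + \cdots + (\lambda_n + \mu_n) v_n$, where all $\lambda_i, \mu_i \in K$, and multiplying a linear combination of S by a scalar $c \in K$ will produce another linear combination of S: $c(\lambda_1 v_1 + \cdots + \lambda_n v_n) = c\lambda_1 v_1 + \cdots + c\lambda_n v_n$. Thus span S is a subspace of V.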

- Note that $S \subseteq \operatorname{span} S$, since every $v_i$ is trivially a linear combination of S. Now suppose that W is a linear subspace of V containing S. Since W is closed under addition and scalar multiplication, every linear combination of elements of S must be contained in W. Thus span S is contained in every subspace of V containing S. As span S is itself such a subspace, it equals the intersection of all such subspaces, that is, the smallest such subspace.

Size of spanning set is at least size of linearly independent set

Every spanning set S of a vector space V must contain at least as many elements as any linearly independent set of vectors from V.

- Proof. Let $S = \{v_1, \ldots, v_m\}$ be a spanning set and $W = \{w_1, \ldots, w_n\}$ be a linearly independent set of vectors from V. We want to show that $m \geq n$.

- Since S spans V, $S \cup \{w_1\}$ must also span V, and $w_1$ must be a linear combination of S. Thus $S \cup \{w_1\}$ is linearly dependent, and we can remove one vector from S that is a linear combination of the other elements. This vector cannot be any of the $w_i$, since W is linearly independent. The resulting set is $\{w_1, v_1, \ldots, v_{i-1}, v_{i+1}, \ldots, v_m\}$, which is a spanning set of V. We repeat this step n times, where the resulting set after the pth step is the union of $\{w_1, \ldots, w_p\}$ and m − p vectors of S.

- It is ensured until the nth step that there will always be some $v_i$ to remove from S for every adjoined $w_j$, and thus there are at least as many $v_i$'s as there are $w_i$'s, i.e. $m \geq n$. To verify this, we assume by way of contradiction that $m < n$. Then, at the mth step, we have the set $\{w_1, \ldots, w_m\}$ and we can adjoin another vector $w_{m+1}$. But, since $\{w_1, \ldots, w_m\}$ is a spanning set of V, $w_{m+1}$ is a linear combination of $\{w_1, \ldots, w_m\}$. This is a contradiction, since W is linearly independent.

Spanning set can be reduced to a basis

Let V be a finite-dimensional vector space. Any set of vectors that spans V can be reduced to a basis for V, by discarding vectors if necessary (i.e. if there are linearly dependent vectors in the set). If the axiom of choice holds, this is true without the assumption that V has finite dimension. This also indicates that a basis is a minimal spanning set when V is finite-dimensional.
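For a finite spanning set with rational coordinates, the discarding procedure can be sketched as a single pass: keep a vector only if it is not a linear combination of the vectors already kept. The helper name `reduce_to_basis` is hypothetical, and this greedy pass is one possible way to carry out the reduction, not the only one.

```python
from fractions import Fraction


def reduce_to_basis(vectors):
    """Return a sublist of `vectors` that is a basis of span(vectors).

    Illustrative sketch: each vector is reduced against the vectors kept so far;
    it is kept only when a nonzero remainder is left.
    """
    basis = []    # the original vectors that are kept
    echelon = []  # (pivot_index, reduced_row) for each kept vector
    for v in vectors:
        w = [Fraction(x) for x in v]
        # Subtract multiples of the stored rows to clear their pivot entries.
        for p, row in echelon:
            if w[p] != 0:
                f = w[p] / row[p]
                w = [a - f * b for a, b in zip(w, row)]
        # A nonzero remainder means v is independent of the kept vectors.
        p = next((i for i, x in enumerate(w) if x != 0), None)
        if p is not None:
            echelon.append((p, w))
            basis.append(v)
    return basis
```

Applied to {(1, 0, 0), (0, 1, 0), (1, 1, 0)} in that order, the pass keeps (1, 0, 0) and (0, 1, 0) and discards (1, 1, 0). The result depends on the order in which the vectors are visited, although the number of vectors kept does not.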

Generalizations

Generalizing the definition of the span of points in space, a subset X of the ground set of a matroid is called a spanning set if the rank of X equals the rank of the entire ground set.[7]

The vector space definition can also be generalized to modules.[8][9] Given an R-module A and a collection of elements $a_1, \ldots, a_n$ of A, the submodule of A spanned by $a_1, \ldots, a_n$ is the sum of cyclic modules $Ra_1 + \cdots + Ra_n$, consisting of all R-linear combinations of the elements $a_i$. As with the case of vector spaces, the submodule of A spanned by any subset of A is the intersection of all submodules containing that subset.
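A concrete instance, chosen here only for illustration, is the ring of integers viewed as a module over itself: the submodule spanned by integers $a_1, \ldots, a_n$ is $d\mathbb{Z}$, where d is their greatest common divisor. The function names below are hypothetical.

```python
from functools import reduce
from math import gcd


def z_span_generator(elements):
    """Non-negative d such that the Z-submodule of Z spanned by `elements` is dZ."""
    # gcd(0, a) == abs(a), so 0 is a neutral starting value; the empty set spans {0}.
    return reduce(gcd, elements, 0)


def in_z_span(x, elements):
    """True if the integer x is a Z-linear combination of `elements`."""
    d = z_span_generator(elements)
    return x == 0 if d == 0 else x % d == 0
```

For example, {4, 6} spans $2\mathbb{Z}$, so 10 lies in the span and 3 does not. The example also shows one way modules differ from vector spaces: {2, 3} spans $\mathbb{Z}$, yet neither {2} nor {3} does, and the set is linearly dependent because 3·2 − 2·3 = 0, so it cannot be reduced to a basis by discarding elements.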

Closed linear span (functional analysis)

In functional analysis, a closed linear span of a set of vectors is the minimal closed set which contains the linear span of that set.

Suppose that X is a normed vector space and let E be any non-empty subset of X. The closed linear span of E, denoted by $\overline{\operatorname{Sp}}(E)$ or $\overline{\operatorname{Span}}(E)$, is the intersection of all the closed linear subspaces of X which contain E.

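One mathematical formulation of this, writing $\operatorname{Sp}(E)$ for the linear span of E and $\overline{\operatorname{Sp}}(E)$ for its closed linear span, is

$$\overline{\operatorname{Sp}}(E) = \{u \in X \mid \forall \varepsilon > 0\ \exists x \in \operatorname{Sp}(E) : \|x - u\| < \varepsilon\}.$$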

The closed linear span of the set of functions $x^n$ on the interval [0, 1], where n is a non-negative integer, depends on the norm used. If the $L^2$ norm is used, then the closed linear span is the Hilbert space of square-integrable functions on the interval. But if the maximum norm is used, the closed linear span will be the space of continuous functions on the interval. In either case, the closed linear span contains functions that are not polynomials, and so are not in the linear span itself. However, the cardinality of the set of functions in the closed linear span is the cardinality of the continuum, which is the same cardinality as for the set of polynomials.
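The maximum-norm case can be illustrated numerically. The function |x − 1/2| is continuous on [0, 1] but not a polynomial, so it is not in the linear span of the monomials, yet polynomials approximate it uniformly. The sketch below uses Bernstein polynomials as the approximants, which is one choice among many and is an assumption of this example; the sup norm is only estimated on a uniform grid.

```python
from math import comb


def bernstein(f, n):
    """Return the degree-n Bernstein polynomial of f on [0, 1] as a callable."""
    def B(x):
        return sum(
            f(k / n) * comb(n, k) * x**k * (1 - x) ** (n - k)
            for k in range(n + 1)
        )
    return B


def sup_error(f, g, samples=1001):
    """Grid estimate of max |f - g| on [0, 1] (an approximation, not an exact supremum)."""
    return max(
        abs(f(i / (samples - 1)) - g(i / (samples - 1))) for i in range(samples)
    )


def target(x):
    """A continuous function on [0, 1] that is not a polynomial."""
    return abs(x - 0.5)


# Estimated uniform distance between the target and its Bernstein polynomials.
errors = [sup_error(target, bernstein(target, n)) for n in (4, 16, 64)]
```

The estimated errors shrink as the degree grows, consistent with the target lying in the closure of the polynomials under the maximum norm even though it is not itself a polynomial.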

Notes

The linear span of a set is dense in the closed linear span. Moreover, as stated in the lemma below, the closed linear span is indeed the closure of the linear span.

Closed linear spans are important when dealing with closed linear subspaces (which are themselves highly important, see Riesz's lemma).

A useful lemma

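Let X be a normed space and let E be any non-empty subset of X. Then, writing $\operatorname{Sp}(E)$ for the linear span of E and $\overline{\operatorname{Sp}}(E)$ for its closed linear span,

- $\overline{\operatorname{Sp}}(E)$ is a closed linear subspace of X which contains E,

- $\overline{\operatorname{Sp}}(E) = \overline{\operatorname{Sp}(E)}$, viz. $\overline{\operatorname{Sp}}(E)$ is the closure of $\operatorname{Sp}(E)$.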

(So the usual way to find the closed linear span is to find the linear span first, and then the closure of that linear span.)

Footnotes

Citations

1. Encyclopedia of Mathematics (2020). Linear Hull.

2. Axler (2015) p. 29, § 2.7

3. Axler (2015) pp. 29-30, §§ 2.5, 2.8

4. Hefferon (2020) p. 100, ch. 2, Definition 2.13

5. Axler (2015) pp. 29-30, §§ 2.5, 2.8

6. Roman (2005) pp. 41-42

7. Oxley (2011) p. 28

8. Roman (2005) p. 96, ch. 4

9. Mac Lane & Birkhoff (1999) p. 193, ch. 6

Sources

Textbooks

- Axler, Sheldon Jay (2015). Linear Algebra Done Right (PDF) (3rd ed.). Springer. ISBN 978-3-319-11079-0.

- Hefferon, Jim (2020). Linear Algebra (PDF) (4th ed.). Orthogonal Publishing. ISBN 978-1-944325-11-4.

- Mac Lane, Saunders; Birkhoff, Garrett (1999) [1988]. Algebra (3rd ed.). AMS Chelsea Publishing. ISBN 978-0821816462.

- Oxley, James G. (2011). Matroid Theory. Oxford Graduate Texts in Mathematics. Vol. 3 (2nd ed.). Oxford University Press. ISBN 9780199202508.

- Roman, Steven (2005). Advanced Linear Algebra (PDF) (2nd ed.). Springer. ISBN 0-387-24766-1.

- Rynne, Brian P.; Youngson, Martin A. (2008). Linear Functional Analysis. Springer. ISBN 978-1848000049.

- Lay, David C. (2021). Linear Algebra and Its Applications (6th ed.). Pearson.

Web

- Lankham, Isaiah; Nachtergaele, Bruno; Schilling, Anne (13 February 2010). "Linear Algebra - As an Introduction to Abstract Mathematics" (PDF). University of California, Davis. Retrieved 27 September 2011.

- Weisstein, Eric Wolfgang. "Vector Space Span". MathWorld. Retrieved 16 Feb 2021.

- "Linear hull". Encyclopedia of Mathematics. 5 April 2020. Retrieved 16 Feb 2021.

External links

- Linear Combinations and Span: Understanding linear combinations and spans of vectors, khanacademy.org.

- Sanderson, Grant (August 6, 2016). "Linear combinations, span, and basis vectors". Essence of Linear Algebra. Archived from the original on 2021-12-11 – via YouTube.